By Mpai Mollo

If you have accessed the internet at all in the last few months, you will have seen or even interacted with an AI tool. From Meta AI on WhatsApp and Instagram to Gemini on Google and Copilot on Bing, it appears as if AI applications have taken over our screens overnight. People are divided over whether the rise of AI apps is a good thing: whether it will serve us and benefit society, or whether the massive increase in such tools will result in robots “taking over” and doing all the jobs that humans used to do.

Tools like ChatGPT, DALL-E, Jasper and Writesonic have made it easier to do a plethora of tasks, from designing recipes for gluten-free, dairy-free, vegan-friendly desserts or writing up your English literature review the day before it’s due, to more complex tasks like coding a website for your online business or drawing up a storyboard for an episode of a hit TV series. Many are worried that we are becoming more dependent on AI and relying less and less on creative, multifaceted human thinking, which offers a unique perspective that artificial intelligence cannot quite get right.

However, I am of the opinion that AI can be a useful tool when it is not trying to imitate humans, but rather focusing on what it does best: analysing and processing large amounts of data and identifying patterns therein. Academics at the University of South Australia (UniSA) and Middle Technical University (MTU) seem to agree, as they are using AI for purposes that may be far more complex than even the developers who designed these programmes thought possible. Researchers at UniSA are using a trained computer model to screen for diseases that previously required invasive, lengthy and/or costly testing and could only be diagnosed once the disease presented with certain symptoms, at which point it may be too late to treat.

How does this work?

A webcam is placed 20 cm in front of a patient and a picture is taken of the patient’s tongue. The image of the tongue is then analysed for colour, shape and thickness. By comparing it against previously uploaded images of tongues from different disease states, the computer-based AI model can match the pigment of the tongue to a specific disease. Professor Al-Naji stated that this algorithm uses a technique from Traditional Chinese Medicine, where “examination, smell, auditory evaluation and physical palpation” can be used to investigate and extract specific information about a person’s health status. The tongue is thought to form part of an interconnected network with the body’s other organs and thus its unique features and topography are able to signal ailments in these other systems.

So far, this model has been able to diagnose diseases like asthma, anaemia, diabetes, stroke and even cancer with 98% accuracy!

Background to the development of the diagnostic algorithm

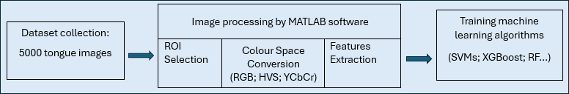

This study builds on previous work by various research groups in which different tongue features were examined in order to detect prediabetes, diabetes or COVID-19 infections. In the study by academics at UniSA and MTU, the research teams began by training several machine learning algorithms, feeding them over 5000 images of differently coloured tongues and their associated diseases.

Figure 1: Table showing tongue colour and associated disease. Adapted from Hassoon et al Table 1.

These images were processed in MATLAB (a numerical computing environment with image processing tools) to single out the Region of Interest and classify its colour using several colour space conversion models.

These models include RGB (red, green, blue), commonly used in cameras and televisions, and HSV (hue, saturation, value), which describes colour in terms closer to how the human eye perceives it. The data was then fed to the various machine learning algorithms for training and, ultimately, the best-performing trained model was saved and selected to test whether it could predict certain diseases.
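To make the colour space step more concrete, here is a minimal sketch in Python (the study itself used MATLAB). It averages the pixels of a region of interest and converts the result from RGB to hue, saturation and value using the standard library's `colorsys` module. The function names and the pixel values are assumptions made up for illustration; they are not taken from Hassoon et al.

```python
import colorsys

def mean_rgb(pixels):
    """Average a list of (R, G, B) pixels with 0-255 channels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def rgb_to_hsv_degrees(rgb):
    """Convert 0-255 RGB to (hue in degrees, saturation, value)."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # all three results are in 0..1
    return h * 360.0, s, v

# Hypothetical region-of-interest pixels (made-up values, not study data).
roi_pixels = [(200, 100, 110), (210, 110, 120), (190, 90, 100)]

avg = mean_rgb(roi_pixels)
hue, sat, val = rgb_to_hsv_degrees(avg)
print(f"mean RGB = {avg}, hue = {hue:.0f} deg, sat = {sat:.2f}, val = {val:.2f}")
```

Numbers like these, computed in each colour space, are the kind of features that could then be handed to a machine learning algorithm for training.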

Figure 2: Flow map showing workflow used to train various machine learning algorithms. Adapted from Hassoon et al Figure 2.

Sixty images of tongues were then captured by the live imaging system's webcam. Each image was processed in MATLAB using the XGBoost algorithm, and the tongue colour was assessed in order to determine whether the tongue appeared normal (healthy) or abnormal (disease state). In the case of disease, the model was then able to predict the specific affliction based on the perceived colour. These images were taken from patients who had already been diagnosed, so the scientists were able to tell in real time whether the machine got the diagnosis right or wrong. The XGBoost MATLAB user interface accurately diagnosed 58 out of 60 images, a whopping accuracy of roughly 96.7%!
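The accuracy figure is simply the share of images the model got right. As a quick check, the short Python sketch below recomputes it from the reported tally of 58 correct out of 60. The label lists are placeholders arranged only to reproduce that tally; they are not the study's actual per-patient results.

```python
def accuracy(predicted, actual):
    """Return the fraction of predictions that match the known diagnoses."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

# Placeholder labels: 60 cases, of which 58 predictions match (assumed layout).
actual = ["abnormal"] * 60
predicted = ["abnormal"] * 58 + ["normal"] * 2

score = accuracy(predicted, actual)
print(f"{score:.1%}")  # prints 96.7%
```

Since 58 / 60 = 0.9666..., the result is 96.7% when rounded to one decimal place, or 96.6% if the figure is truncated instead.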

Figure 3: Diagram illustrating how trained algorithm (XGBoost) will diagnose based on perceived tongue colour. Adapted from Hassoon et al Figure 2.

Why is this significant?

With more refining, this model could be used to diagnose thousands of patients whose diseases may still be in relatively early stages, and at a more affordable cost. This means that invasive procedures such as biopsies and blood tests may no longer be required to diagnose specific disorders; underfunded hospitals may be able to use digital cameras to diagnose patients without the need for expensive equipment such as X-ray or MRI machines; and individuals who live far from hospitals or clinics may still be able to get accurate diagnoses using the cameras on their smartphones. This technology could be a tremendous use of AI, showing how it can be used for the greater good of humanity; just imagine a future where you can get a diagnosis simply by taking a selfie!

Article adapted from (Hassoon et al, 2024) “Tongue Disease Prediction Based on Machine Learning Algorithms”

Hassoon, A.R.; Al-Naji, A.; Khalid, G.A.; Chahl, J. Tongue Disease Prediction Based on Machine Learning Algorithms. Technologies 2024, 12, 97. https://doi.org/10.3390/technologies12070097
